There’s a great series of online sci-fi short stories and novellas that was turned into a book called There Is No Antimemetics Division. The stories center on the idea that some ideas are too powerful to be known. To know them is to expose yourself as a target, because [spoiler]. So, the only way to combat these ideas is to not spread them and not even know them, while somehow keeping them in your unconscious mind as you blindly work towards combating the danger they represent. Hence, they are antimemes: ideas designed not to be spread.

This is kind of how I think about emergent properties in biology. It’s one of the most troubling parts of the field: on a macro level, we deal with many of the cool biological adaptations I talk about in this blog, like how some langurs can drink seawater without dying or some fish can become cavefish. On a micro level, we deal with innumerable proteins and pathways. Connecting the two is difficult, as my long-winded blog posts show. We usually can’t fully figure out how the one leads to the other.

I’m not the first one to notice this, of course. This is one of the most fundamental problems in biology, going back to at least the discovery of DNA. So many of the tools that we’ve developed have been in pursuit of connecting these two levels: gene knockout, gene overexpression, gene knockdown, gene jeans, the list goes on and on.

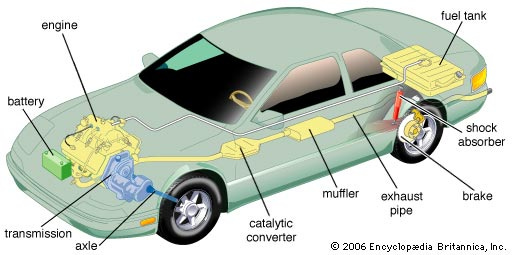

And yet, still, this gap remains impossible to bridge. We remain like people trying to understand a car (or a radio) by taking out random lug nuts and seeing if the car breaks. We still lack a holistic understanding of how the car is even put together at rest, never mind how it runs. It’s enough to drive you crazy if you think about it.

But, on the other hand, if you don’t think about it, it does kind of work. We do have some ideas on how DNA leads to proteins, which lead to macro features. We can engineer flies, mice, and even humans on a DNA level to give them desirable phenotypes on a whole organism level, like freedom from sickle cell disease or beta thalassemia.

That’s why I say the problem of emergent properties in biology is kind of like an antimeme: if you don’t think about it too hard, it’s not a problem. We can fix the car: not completely, not if it’s totaled, not if it’s rusted, but we can fix it for certain important problems. We can sometimes even fix it from first principles, given our limited understanding of what those principles are. But then, if we stare at the whole car in its complexity and think about how to build it, we are paralyzed.

For a long time, we assumed the only way we can get past this hurdle is by brute forcing it. If we understood enough pathways and enough proteins, then slowly, slowly, we’d understand the cell. Then we’d understand the tissue, and the organ, and the body. This is, of course, not how we understood the car (no auto class starts by learning the position of every lug nut), but there didn’t seem to be any other way.

This seems to have changed now. The advent of deep learning models, like AlphaFold, has allowed us to jump ahead and start seeing emergent properties in a number of systems, biological and not. And, in the process, it’s totally wrecked the automotive analogy, to the point where I’ve noticed most people still don’t realize how outdated it is. I mean, deep learning is basically the equivalent of an automotive manufacturing class that starts with giving the computer a bunch of data about different lug nuts and bolts in functioning cars, most importantly which ones are next to which, and then the computer puts together a digital model of a functioning car. And then, if you print out those lug nuts in the exact order in the real world, they self-assemble into a functioning car. We’ve gone way outside our original analogy, and we’re struggling to keep up.

This is a tricky situation! Good, but tricky. We’ve somehow gotten to the point where our models understand how the micro connects to the macro (albeit partially, and only for most proteins and some whole cells), but we don’t. But, actually, our models don’t understand it either, because understanding doesn’t come with the training process. They just do it. So, for some really important problems, the problem of emergence has been solved, but nobody can point to how or where or what it means.

This is a surprisingly OK state of affairs for a lot of purposes. David Baker’s lab has been doing a ton of really cool stuff involving designing proteins using these sorts of deep learning models, including designing enzymes and creating conditionally binding proteins. Meanwhile, Arc Institute has been working on designing prokaryotic cells starting from the genome level, including designing useful prokaryotic tools like CRISPR-Cas systems. These projects require an intimate understanding of deep learning models and their quirks, but they still don’t crack the black box open.

Leaving the black box closed means missing out on a big opportunity, though. It’s true that we don’t need to crack these black boxes open to do a lot of cool stuff. It’s even more true that there’s a ton of cool stuff left to do without cracking the black boxes open, as David Baker’s voluminous output post-Nobel Prize suggests (30+ papers and counting in 2024 alone).

But somewhere in that black box is some kind of understanding of how micro features relate to macro features. I’m not saying this decades-old problem is close to being solved, but we have a sudden new tool for trying to solve more of it. It’s a neat opportunity.

That’s why I’ve been excited by this rash of biological mechanistic interpretability work that’s popped up out of nowhere in the last few weeks. Mechanistic interpretability, if you’re unaware, is a set of techniques for analyzing these models so that their mechanics become, well, interpretable. Once their mechanics are interpretable, it’s also possible to tweak their mechanics, and ratchet up or down pieces of the black box. So, we can actually better understand how these models understand biology.

I have to admit that the usefulness of this for biology took me by surprise. I had been vaguely aware of mechanistic interpretability from Anthropic’s “Golden Gate Claude” work, which showed that they could tweak their LLM, Claude, to become obsessed with the Golden Gate Bridge. I thought this was fun, but not academically interesting, because I’m not that interested in the inner workings of LLMs. So, I forgot about it after playing around for a bit.

Then, about a month or two ago, I came across a great, long essay by Adam Green, “Through A Glass Darkly”, in which he claimed to have created a virtual cell by training a model on single-cell gene count data, or the counts of genes expressed inside individual cells. This allowed his model to learn which genes co-express in which cells and tissues, and predict which genes will tend to co-express in new cells and tissues.
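To make “which genes co-express” concrete, here is a minimal sketch in Python. It is not Adam’s method: it swaps his deep learning model for a plain Pearson correlation between gene columns, and the gene names and counts are made up for illustration. The only point is to show what kind of signal is sitting in a table of per-cell gene counts.

```python
import math

# Hypothetical toy data (an assumption, not real measurements):
# each row is one cell, each column is the count for one gene.
GENES = ["geneA", "geneB", "geneC"]
COUNTS = [
    [10, 9, 0],
    [8, 7, 1],
    [1, 2, 9],
    [0, 1, 8],
    [5, 5, 4],
]


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of counts."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def coexpression(counts, genes):
    """Score every pair of genes by how their counts move together across cells."""
    columns = list(zip(*counts))  # transpose: one tuple of counts per gene
    return {
        (genes[i], genes[j]): pearson(columns[i], columns[j])
        for i in range(len(genes))
        for j in range(i + 1, len(genes))
    }


scores = coexpression(COUNTS, GENES)
for pair, score in scores.items():
    print(pair, round(score, 2))
```

In this toy table, geneA and geneB rise and fall together across cells, while geneC is high exactly where they are low. A deep model trained on real data is presumably picking up far richer, nonlinear versions of the same kind of pattern.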

Off the bat, I was admittedly skeptical of his claim that this gene count data constitutes a virtual cell, and in some respects I still am. A cell, after all, isn’t just a static collection of genes that have been expressed. A cell is a living, changing thing that obeys the laws of physics (especially fluid dynamics). Even the magic of deep learning can’t change that.

However, I kept reading. Adam explained that, once he built his “virtual cell”, he also built a “virtual microscope” to peer inside the cell. This, as you can probably guess, was his mechanistically interpretable model. He argued that, when his model learned which genes tended to co-express in new cells and tissues, it was actually learning how the expression of genes would change as cells or tissues themselves changed.

That is, even though the model was trained on static, flat snapshots, it learned how the snapshots would change when any piece of a snapshot changed. This meant that his model somehow understood the life of the cell, and all that was left to do was interrogate it. If we interrogated it, we could learn from it what would happen in different scenarios. In other words, we could run virtual experiments.
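Here is a deliberately tiny sketch of what “learn from static snapshots, then run a virtual experiment” could mean, written in Python. Everything in it is an assumption made for illustration: the data are invented, the two genes are hypothetical, and a straight-line fit stands in for the deep learning model. It is not Adam’s model.

```python
# Hypothetical snapshots (an assumption): each tuple is
# (count of a regulator gene, count of a target gene) in one cell.
SNAPSHOTS = [(1, 3), (2, 5), (3, 7), (4, 9), (5, 11)]


def fit_line(pairs):
    """Ordinary least squares fit of target = intercept + slope * regulator."""
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in pairs) / sum(
        (x - mean_x) ** 2 for x, _ in pairs
    )
    intercept = mean_y - slope * mean_x
    return slope, intercept


def virtual_experiment(pairs, forced_regulator_level):
    """Ask the fitted model what the target would be if the regulator were forced to a level."""
    slope, intercept = fit_line(pairs)
    return intercept + slope * forced_regulator_level


# No snapshot has the regulator at 10, so this is a prediction about an unseen cell state.
predicted = virtual_experiment(SNAPSHOTS, 10)
print(predicted)
```

The catch is visible even in the toy version: the fit only knows that the two genes move together in the snapshots. Whether forcing one gene up in a real cell would actually drag the other along is a separate question that the snapshots alone cannot settle.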

I was so taken aback by this idea that I felt that I had to understand it better before reaching a conclusion. I reached out to some friends who are better versed in this sort of modeling than I am (like owl posting and Stephen Malina), and also reached out to Adam directly. It took a while, but I think I finally get what Adam really did, what the promises of this idea are, and what the limitations are.

Let me begin, as I like to do, with an analogy. Imagine you are someone who’s never been to Hawaii. In fact, you don’t know anything about Hawaii, or anything about the world in general. You have lived your entire life in a windowless room, lit only by a single lightbulb. One day, someone passes a bunch of photos of Hawaii underneath your door. They show all parts of Hawaii, including the cities, the beaches, and the airport. These photos are weird: they are amalgamations of all times and weather conditions, which is not a problem for understanding the airport photos but is more of a problem for understanding the beach ones, as storms, sunny days, sunbathing, and storm surges combine into one complicated image.

The rest of this post continues my Hawaii analogy and talks about it in relation to Adam Green’s work and what it means for the problem of emergence.
